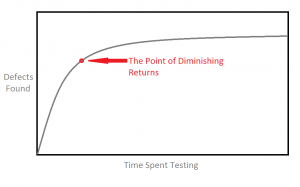

I don’t imagine it’s a stunning revelation to say testing has a point of diminishing returns (which isn’t to say testing is not vital, even central to software development). This is what I’ve come up with based on my experience; hopefully someone out there can point me to more official research:

Note that there is a geometric progression here. The first few defects do not take very long to find. Then it gets harder and harder to find more defects. Bugs 1-10 might take you an hour. The 100th bug by itself might take you 10 hours.
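To make the shape of that curve concrete, here is a minimal Python sketch. It swaps in a simple exponential discovery model, which is an assumption and not the author’s data: a fixed pool of `total_bugs` latent defects, each remaining defect found at a constant `rate` per hour, so the expected number found after t hours is total_bugs × (1 − e^(−rate·t)). Inverting that gives the hour at which the k-th bug is expected. The function names and parameter values are hypothetical.

```python
import math


def hour_of_kth_bug(k: int, total_bugs: int, rate: float) -> float:
    """Expected testing hours until the k-th bug is found.

    Assumes found(t) = total_bugs * (1 - exp(-rate * t)), which inverts to
    t_k = -ln(1 - k / total_bugs) / rate. Only defined for 0 <= k < total_bugs.
    """
    if not 0 <= k < total_bugs:
        raise ValueError("k must be in [0, total_bugs)")
    return -math.log(1 - k / total_bugs) / rate


def hours_for_bug(k: int, total_bugs: int, rate: float) -> float:
    """Hours spent on the k-th bug alone, i.e. the gap since the (k-1)-th bug."""
    return hour_of_kth_bug(k, total_bugs, rate) - hour_of_kth_bug(k - 1, total_bugs, rate)


if __name__ == "__main__":
    # Hypothetical parameters: 120 latent bugs, rate chosen so bugs 1-10 take under an hour.
    N, RATE = 120, 0.1
    print(f"bugs 1-10 take {hour_of_kth_bug(10, N, RATE):.2f} h in total")
    for k in (1, 10, 50, 100):
        print(f"bug {k} alone takes {hours_for_bug(k, N, RATE):.2f} h")
```

In this model the gap for bug k is ln((N − k + 1)/(N − k))/rate, which grows with k for any rate. Better testing techniques raise `rate` and shift the whole curve left, but each additional bug still costs more than the last.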

Where exactly the point of diminishing returns lands on this curve depends on a number of things, of course. First and foremost is whether any lives are at stake. For example, if you’re testing body armor for the US military, you would think the point would be quite a ways up and to the right. (Evidently, though, it’s not.) In software, medical applications that someone’s life could depend on might qualify.

Next might be money, as with banking applications. You wouldn’t want to go live with a beta version of software that, for example, manages bank accounts, account transfers, and the like. (These people know that now.)

Most Web applications, on the other hand, sit a little to the left of the far-right edge of the chart. No one is going to live or die if someone can or cannot post a MySpace comment. Ecommerce applications such as SoftSlate Commerce do have to deal with money, so they deserve special attention, especially the parts involved in payment transactions and the like.

But besides how mission-critical the code in question is, there are other factors:

- Would defects in the code be easy to recover from? (Some defects come out of left field and take forever to fix, but most of the time, the developer has a gut feeling about what the nature of the defects would be and can make a judgement.)

- Is the code isolated enough that it would not have cascading effects? (An argument for modular development.)

- What is the client’s tolerance for risk on a human level? (Well, it matters!)

- Would additional testing delay deploying the feature beyond an important date?

- Would additional testing cause the feature to suffer from marketing amnesia? (In which case, maybe it shouldn’t have been developed in the first place!)

- Are all hands going to be available if a defect is discovered? (There is a tendency to want to deploy big projects in the middle of the night, when there are as few users as possible. While that makes sense sometimes, we prefer to launch big features at the beginning of a regular workday, when everybody is around, alert, and ready to help. Definitely not on Friday at 5:30pm!)

- Is the feature being launched with a lot of ballyhoo? (Prefer “soft launches” if you don’t have as much time to test.)

You might think it’s professional negligence to say all this about testing, and that one should always strive for perfection, zero defects, in which case the above factors shouldn’t matter. But our time and our clients’ money are too valuable to waste on testing to an extreme. Yes, our clients deserve excellent software, but they also deserve for us to be smart about how we achieve it. For example, a couple of the most cost-effective testing techniques we use regularly are parallel ops and automated functional testing. They are good ways to catch more defects without going beyond the point of diminishing returns. With the time left over, we can make the software better in much more certain, tangible ways.
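Parallel ops can be sketched in a few lines, assuming it means running the existing code and the new code side by side on the same real inputs and flagging every disagreement. This is a minimal Python sketch; `legacy_order_total`, `new_order_total`, and the order data are hypothetical and not SoftSlate Commerce code. The per-line tax rounding in the new version is an invented example of the kind of subtle change this technique surfaces.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def legacy_order_total(items, tax_rate):
    """Hypothetical existing implementation: apply tax once, round once at the end."""
    subtotal = sum(Decimal(price) * qty for price, qty in items)
    total = subtotal * (1 + Decimal(tax_rate))
    return total.quantize(CENT, rounding=ROUND_HALF_UP)


def new_order_total(items, tax_rate):
    """Hypothetical rewrite under test: rounds tax per line item (a subtle behavior change)."""
    total = Decimal("0")
    for price, qty in items:
        line = Decimal(price) * qty
        tax = (line * Decimal(tax_rate)).quantize(CENT, rounding=ROUND_HALF_UP)
        total += line + tax
    return total.quantize(CENT, rounding=ROUND_HALF_UP)


def run_parallel(orders, old, new):
    """Feed identical inputs to both implementations; collect (id, old, new) mismatches."""
    mismatches = []
    for order_id, (items, tax_rate) in orders.items():
        expected, actual = old(items, tax_rate), new(items, tax_rate)
        if expected != actual:
            mismatches.append((order_id, expected, actual))
    return mismatches


# Hypothetical production orders replayed through both code paths.
orders = {
    "A-1": ([("10.00", 2)], "0.1"),
    "A-2": ([("0.05", 1), ("0.05", 1)], "0.1"),
}

if __name__ == "__main__":
    for order_id, old_total, new_total in run_parallel(orders, legacy_order_total, new_order_total):
        print(f"{order_id}: legacy={old_total} new={new_total}")
```

Order A-2 totals 0.11 under the legacy rounding and 0.12 under the new one, a one-cent difference a hand-written test might never think to check, but a comparison against real traffic finds automatically.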

In my experience in the hardware world, I would say that you are roughly correct: testing does have diminishing returns over time.

However, there are techniques that can greatly increase how many bugs you find and make the curve look more 1:1.

In particular, pseudo-random testing is very interesting. It lets you hit hard-to-think-of corner cases easily. The problem is that to do it properly you need not only a custom-built language to randomize all the inputs, but also a reference model (easy to build for simple tests, but very hard for the whole system) and functional coverage.
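The core loop of pseudo-random testing against a reference model can be sketched in Python, standing in for a dedicated verification language. Everything here is hypothetical: the "design under test" is an 8-bit unsigned saturating adder, the reference model is the obvious `min(a + b, 255)`, and the stimulus is biased toward corner values, a simple stand-in for constrained-random generation.

```python
import random


def sat_add_u8_dut(a: int, b: int) -> int:
    """Hypothetical 'optimized' design under test: 8-bit unsigned saturating add."""
    s = (a + b) & 0x1FF           # keep the carry into bit 8
    return 0xFF if s & 0x100 else s


def sat_add_u8_buggy(a: int, b: int) -> int:
    """Hypothetical buggy variant: off-by-one, fails to saturate when a + b == 256."""
    s = a + b
    return 0xFF if s > 0x100 else s & 0xFF


def sat_add_u8_ref(a: int, b: int) -> int:
    """Reference model: the slow, obvious, trusted version."""
    return min(a + b, 255)


def random_operand(rng: random.Random) -> int:
    """Biased stimulus: corner values 30% of the time, otherwise uniform over 0..255."""
    if rng.random() < 0.3:
        return rng.choice([0, 1, 127, 128, 254, 255])
    return rng.randrange(256)


def run_random_test(dut, ref, n_trials: int = 10_000, seed: int = 1):
    """Drive the same random inputs into DUT and reference; return first mismatch or None."""
    rng = random.Random(seed)     # fixed seed so any failure is reproducible
    for _ in range(n_trials):
        a, b = random_operand(rng), random_operand(rng)
        got, want = dut(a, b), ref(a, b)
        if got != want:
            return (a, b, got, want)
    return None


if __name__ == "__main__":
    print("good DUT:", run_random_test(sat_add_u8_dut, sat_add_u8_ref))
    print("buggy DUT:", run_random_test(sat_add_u8_buggy, sat_add_u8_ref))
```

Nobody has to think of the a + b == 256 case explicitly; the random inputs plus the reference model find it. The fixed seed matters: a random failure you cannot replay is nearly useless for debugging.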

Without coverage, your random testing is blind, and there is no real way of knowing what exactly you are testing. Code coverage helps here, but to truly understand what your random tests are doing you need a more elaborate way of measuring what is happening in your application. Have I executed this function with all the relevant values for its inputs? Have I executed this function AFTER calling that one?
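Those two questions map onto value bins and call-sequence goals. Below is a minimal Python sketch of a functional-coverage collector, loosely modeled on the coverpoint/bin idea. The class, the `amount` coverpoint, and the `capture`/`refund` call names are all hypothetical; this is not the API of Specman or SystemVerilog.

```python
from collections import Counter


class FunctionalCoverage:
    """Hypothetical coverage collector: value bins per input plus back-to-back call pairs."""

    def __init__(self, bins, sequences):
        self.bins = bins                    # {coverpoint: {bin name: predicate}}
        self.sequences = set(sequences)     # {(first call, second call)} goals
        self.hits = Counter()
        self.seen_sequences = set()
        self._last_call = None

    def sample(self, call, **values):
        """Record one call: which bins its argument values fall into, and what preceded it."""
        for point, value in values.items():
            for bin_name, predicate in self.bins.get(point, {}).items():
                if predicate(value):
                    self.hits[(point, bin_name)] += 1
        if (self._last_call, call) in self.sequences:
            self.seen_sequences.add((self._last_call, call))
        self._last_call = call

    def holes(self):
        """Goals never hit: the things your random tests are NOT actually testing."""
        missing = [("bin", p, b) for p, bs in self.bins.items() for b in bs
                   if self.hits[(p, b)] == 0]
        missing += [("seq", a, b) for a, b in sorted(self.sequences - self.seen_sequences)]
        return missing

    def percent(self):
        total = sum(len(bs) for bs in self.bins.values()) + len(self.sequences)
        return 100.0 * (total - len(self.holes())) / total


# Hypothetical goals: interesting payment amounts, and "refund right after capture".
cov = FunctionalCoverage(
    bins={"amount": {"zero": lambda v: v == 0,
                     "small": lambda v: 0 < v < 100,
                     "max": lambda v: v == 10_000}},
    sequences={("capture", "refund")},
)
cov.sample("authorize", amount=50)
cov.sample("capture", amount=50)
cov.sample("refund", amount=0)

if __name__ == "__main__":
    print(f"{cov.percent():.0f}% covered; holes: {cov.holes()}")
```

Here the report says 75% and names the hole (the maximum amount was never exercised), which tells you where to steer the random generator next.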

The hardware world solved these problems by creating dedicated languages for generating random stimulus and for capturing functional “coverage points” and “coverage groups”, and by developing a series of ever-more-refined methodologies around them.

Take a look at Specman (the e language), SystemVerilog, and the SystemC Verification Library.

Byte shift testing is the best testing methodology. It can be done throughout the entire development timeframe of the project and, once deployed, can be used to administer alterations and changes to the deployment.

As a general statement, I agree that as you do more testing, you get less value out of the results. A more important observation, in my opinion, is that not all tests are created equal.

Instead of focusing on the number of tests, focus on ROI-based thinking. Also, you haven’t even discussed manual versus automated tests, or regression testing versus initial testing (many edge cases are worth testing once, but if they are in a static area of code, they may not justify testing again). And then there is X-ability testing (scalability, usability, and the like).
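ROI-based thinking can be sketched as ranking candidate tests by expected value per unit of cost. This is a minimal Python sketch under loudly stated assumptions: each test carries hypothetical estimates of its chance of catching a defect this cycle, the cost if that defect escaped, and its own cost to run and maintain. The greedy selection is a heuristic, not an optimal knapsack solution.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    p_catch: float       # hypothetical estimate: chance this run catches a defect
    defect_cost: float   # hypothetical estimate: cost if that defect escaped
    run_cost: float      # cost to run/maintain the test this cycle (same units)

    @property
    def roi(self) -> float:
        """Expected value of running the test divided by its cost."""
        return self.p_catch * self.defect_cost / self.run_cost


def pick_tests(candidates, budget: float):
    """Greedy by ROI within a budget; stop once expected value no longer covers cost."""
    chosen, spent = [], 0.0
    for test in sorted(candidates, key=lambda t: t.roi, reverse=True):
        if test.roi < 1.0:
            break
        if spent + test.run_cost <= budget:
            chosen.append(test.name)
            spent += test.run_cost
    return chosen


# Hypothetical numbers. The legacy edge case was worth testing once, but its code
# has not changed since, so re-running it has a tiny chance of catching anything.
candidates = [
    Candidate("checkout_payment_regression", 0.10, 5000, 20),
    Candidate("legacy_report_edge_case", 0.001, 200, 30),
    Candidate("new_coupon_feature", 0.30, 800, 40),
    Candidate("admin_bulk_export", 0.05, 1000, 60),
]

if __name__ == "__main__":
    print(pick_tests(candidates, budget=100))
```

The estimates are the hard part, and they will be rough; the value is in forcing the question "what does this test buy us?" instead of counting tests.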

All of these things are far, far more important than finding that point of diminishing value in tests because your metric, number of tests, isn’t the best way to think about the challenge of assuring quality. It’s just another metric to help you balance your efforts and judge your effectiveness.

If the return on testing is finding bugs, then yes there are diminishing returns. If the return on testing is confidence in knowing there are very few bugs, then I don’t think that curve holds.

Obviously, we can’t test every line of code all the time. However, we use our clients to test frequent beta releases to get feedback we’d never get from ourselves. The non-technical domain expert will always find things you can’t find in-house.

Testing is always going on; just because the guys/gals writing the software aren’t doing it doesn’t mean it’s not happening.

I’m testing Firefox right now as I write this comment.

I think it’s important to separate the time/resource cost spent uncovering bugs from the cost/tradeoff spent fixing and retesting them.

Defect tracking systems are glutted with years-old bugs that will never be fixed. Some relate to browsers, operating systems, and hardware platforms that are no longer supported; others involve features slated to be obsoleted or circumvented by new features.

Bug statuses and priorities are often misleading or meaningless from a cost perspective. To the tester who specializes in obscure administrative functions, a bug may scream showstopper, yet the field and support engineers know for a fact that no customer has come within miles of that particular function. And how many “cosmetic” bugs have been pushed to the top of the queue because they’re in highly visible locations that customers (and demoing salespeople) are sure to notice?
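One way to fold that cost perspective into triage is to scale nominal severity by real-world exposure. This Python sketch is a hypothetical scheme, not any tracker’s built-in feature: the severity weights, the `usage_share` field (fraction of customers who use the affected feature), and the 3× boost for demo-visible areas are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical severity scale; real teams would calibrate their own weights.
SEVERITY_WEIGHT = {"showstopper": 10, "major": 5, "minor": 2, "cosmetic": 1}


@dataclass
class Bug:
    bug_id: str
    severity: str
    usage_share: float            # fraction of customers who use the affected feature (0..1)
    visible_in_demo: bool = False


def business_priority(bug: Bug) -> float:
    """Severity scaled by exposure; highly visible (demo) areas get a 3x boost."""
    score = SEVERITY_WEIGHT[bug.severity] * bug.usage_share
    return score * 3 if bug.visible_in_demo else score


def rank_bugs(bugs):
    """Highest business priority first."""
    return sorted(bugs, key=business_priority, reverse=True)


bugs = [
    Bug("ADM-1", "showstopper", usage_share=0.0),   # obscure admin function nobody uses
    Bug("UI-7", "cosmetic", usage_share=0.9, visible_in_demo=True),
    Bug("CHK-3", "major", usage_share=0.4),
]

if __name__ == "__main__":
    for bug in rank_bugs(bugs):
        print(bug.bug_id, round(business_priority(bug), 2))
```

Under these assumed weights the cosmetic home-page bug outranks the "showstopper" that no customer can reach, which is exactly the mismatch between nominal status and actual cost described above.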

This is why managers who reduce everything to bug counts aren’t doing anyone any favors.

The concept of “good enough” quality for software has been around for a while, and is a formal description of your observations here. See http://www.satisfice.com/articles/gooden2.pdf for an example.

In my experience, most programmers test to make something work, not to break it. I have never experienced the diminishing returns, only the other side, where bare-bones testing is done. It seems directly proportional to the programmer’s ego: my code is infallible because I wrote it, so there is no way it could break. Or: testing is tedious, so pass it on.

Having done quite a bit of testing myself when I worked in support/custom development for a West Coast software company (not Microsoft), I can tell you that your metrics about diminishing returns match what I recall from my testing. While we would all like to have perfect code, there is no such thing, so you have to go with good enough. That said, there is one thing that the testing department (at least where I worked) could and should have observed but did not (for whatever reason), and that is:

Never assume the way you (as a tester or developer or even someone in support) use the product is how a customer does or even how most of your customers do.

The biggest mistake I observed was the mindset (shared by too many at that company) of “that’s how everyone does it”, which resulted in more bugs getting into production code than should have.

The graph is incorrect. The point of diminishing returns is an inflection point, where the first derivative is at a maximum.
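The commenter’s claim can be checked directly, assuming the S-shaped (logistic) curve they have in mind. Let B(t) be bugs found after testing effort t, with hypothetical parameters K (total findable bugs), r (steepness), and t_0 (midpoint). Differentiating twice shows that the second derivative vanishes exactly where the first derivative peaks:

```latex
B(t) = \frac{K}{1 + e^{-r(t - t_0)}}

B'(t) = r\,B(t)\left(1 - \frac{B(t)}{K}\right)

B''(t) = r\,B'(t)\left(1 - \frac{2B(t)}{K}\right)

B''(t) = 0 \iff B(t) = \frac{K}{2} \iff t = t_0,
\qquad B'(t_0) = \frac{rK}{4} = \max_t B'(t)
```

Before t_0 each extra hour of testing finds more bugs than the last; after t_0 each extra hour finds fewer. A curve that is concave from the very start, by contrast, has no inflection point, and its returns diminish from the first hour.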